ChimpFACS

(A FACS system adapted for chimpanzees)

What ChimpFACS is:

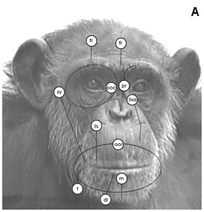

The Chimpanzee Facial Action Coding System (ChimpFACS) is a scientific observational tool for identifying and coding facial movements in chimpanzees. The system is based on the facial anatomy of chimpanzees and was adapted from the original FACS for humans, created by Ekman and Friesen (1978). The ChimpFACS manual explains how to use the system to code the facial movements of chimpanzees objectively. The manual and certification are freely available (see below).

More information on the development of ChimpFACS can be found in:

Parr, L. A., Waller, B. M., Vick, S. J., & Bard, K. A. (2007). Classifying chimpanzee facial expressions using muscle action. Emotion, 7(1), 172.

What ChimpFACS isn't:

ChimpFACS is not an ethogram of facial expressions, and it makes no inference about any emotion or context underlying a movement. Instead, it is an objective coding scheme that makes no assumption about what constitutes a facial expression in this species. It will not explicitly teach you chimpanzee facial expressions.

Accessing the manual

The ChimpFACS Manual is freely available via the link below. To request access to the manual, please contact us at animalfacsuk@gmail.com with the following information:

- Name

- Institution (if applicable)

- Reason for requesting ChimpFACS

You will then be provided with a password to access the manual at the following link:

Please allow 7 working days for a response.

Accessing the test

To become a certified ChimpFACS coder, we encourage you to take the associated test. The ChimpFACS test requires trainees to accurately code the facial movements in a series of video clips.

The materials for the test, and further instructions, can be provided upon request via email (animalfacsuk@gmail.com).

The people behind it

ChimpFACS was developed with the support of The Leverhulme Trust through Research Interchange Grant F/00678/E, “Chimpanzee emotions: Development of a Chimpanzee Facial Action Coding System”, awarded to Kim A. Bard (University of Portsmouth).

ChimpFACS was developed thanks to the joint effort of:

- Kim A. Bard, University of Portsmouth

- Marcia Smith Pasqualini, Avila University

- Lisa Parr, Yerkes National Primate Research Center

- Bridget M. Waller, Department of Psychology, Nottingham Trent University

- Sarah-Jane Vick, Stirling University

Acknowledgments

The idea for this project blossomed while Kim A. Bard, Marcia Smith Pasqualini and Lisa Parr attended the conference, ‘Feelings and Emotions: The Amsterdam Symposium’, in May of 2001. With the considerable support of Paul Ekman and Frans de Waal as independent referees, we were successful in obtaining financial support from The Leverhulme Trust.

In 2002, Sarah-Jane Vick and Bridget Waller began the process of developing ChimpFACS. Along the way, we received warm and essential support from Paul Ekman, Susanne Kaiser, and Harriet Oster. The anatomical basis for ChimpFACS was made possible by enthusiastic collaborations with Anne Burrows, Andrew Fuglevand and Katalin Gothard, and the Yerkes National Primate Research Center. The essential images of chimpanzee faces were collected with the generous assistance of Chester Zoo, Samuel Fernandez-Carriba and William D. Hopkins (the Yerkes National Primate Research Center of Emory University, and the Madrid Zoo), Charles Menzel (Language Research Center of Georgia State University) and The Primate Research Institute, Kyoto University.

In March 2005, with the support of the Centre for the Study of Emotion and Vasudevi Reddy, Head of the Department of Psychology, we held an international conference and workshop to present our work. Special thanks to Anne Pusey and The Jane Goodall Institute for the use of video archives of the Gombe Stream chimpanzees.

In April 2006, with the invaluable assistance of Paul Marshman and the Department of Psychology at Portsmouth, we began disseminating ChimpFACS via this website.